What is AI upscaling?

AI upscaling is a handy development in TV picture processing that takes content of a lower resolution than your TV’s own panel and optimizes it to look better, sharper, and more detailed.

If that sounds a lot like regular old upscaling, you’d be right – the ‘AI’ part just means the upscaling happens with a greater awareness of context.

That’s because AI upscaling involves creating new pixels of image information to add detail where there wasn’t any before, filling in the gaps to recreate a higher-resolution image, all the while using machine learning to improve the result.

Handle this badly and it can look like overcooked picture sharpening, a feature seen in the menus of most TVs, and which we usually advise turning all the way down. But top TV brands – as well as Nvidia, maker of PC graphics hardware – now have compelling 4K and 8K AI upscaling techniques that elevate ‘AI upscaling’ beyond the meaningless marketing buzzword it might have been.

AI Upscaling FAQ

- What is AI upscaling? AI upscaling attempts to make sub-4K footage (or sub-8K in the case of 8K TVs) look more like video shot at your display’s native resolution.

- How is AI upscaling different to normal upscaling? AI upscaling is simply smarter upscaling. Both types prep video for displays with a higher pixel count, but ‘AI upscaling’ uses machine learning to improve the image.

- Who offers the best AI upscaling? Sony and Samsung are at the top of the AI upscaler league in the world of TVs. Nvidia’s Shield TV box also has very effective upscaling, should you have a 4K TV with a basic scaler but otherwise satisfying image quality.

Which TVs have AI upscaling?

All top-end TVs have a form of AI upscaling, even if the manufacturer doesn’t explicitly use the term ‘AI’.

Samsung’s AI upscaling is available in sets with its Quantum Processor hardware. These include the Samsung Q70, Q75, Q80, Q90 and Q900 series – meaning the new QN900A and QN95A are both included.

Sony’s top upscaling is called X-Reality Pro or XR Upscaling depending on the set you buy. 4K and 8K XR Upscaling are Sony’s best.

LG uses the term ‘AI Upscaling’ too, but for the best results you’ll need a TV with an α9 Gen 4 or α7 Gen 4 CPU. These include its top OLED TVs and the higher-end LG NanoCell screens.

Panasonic does not talk about upscaling as much as its competitors, but its top TVs do have an ‘HCX PRO Intelligent Processor’, which makes comparable calculations.

How does AI upscaling work?

Take a piece of 1080p content. It’s designed for displays with a little over two million pixels. But 4K TVs have almost 8.3 million pixels, while 8K TVs pack in a staggering 33 million pixels.
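To put those figures in context, here is a rough sketch of the arithmetic in Python. It assumes the standard pixel dimensions for each format (1920x1080, 3840x2160 and 7680x4320); the function names are ours, purely for illustration.

```python
# Standard width x height for each format (assumed; not stated in the article).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name):
    """Total number of pixels in a frame of the given format."""
    width, height = RESOLUTIONS[name]
    return width * height


def generated_share(source, target):
    """Fraction of the target frame's pixels with no one-to-one source pixel."""
    return 1 - pixel_count(source) / pixel_count(target)


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels")

print(f"1080p to 4K: {generated_share('1080p', '4K'):.2%} of pixels are generated")
print(f"1080p to 8K: {generated_share('1080p', '8K'):.2%} of pixels are generated")
```

In other words, when a 4K TV shows 1080p video, three out of every four pixels on screen have to be worked out by the upscaler. On an 8K set it is fifteen out of every sixteen.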

The simplest way to fill in those additional pixels is doubling, where blocks of pixels repeat the same visual information, leading to blocky images that are better for retro games than they are for home movies. This is called ‘nearest neighbour resizing’.
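Nearest neighbour resizing is simple enough to sketch in a few lines of Python. This is a minimal illustration on a tiny grid of brightness values, assuming a whole-number scale factor. It is not how any particular TV implements it.

```python
def nearest_neighbour_upscale(image, factor):
    """Upscale a 2D list of pixel values by repeating each pixel factor x factor times."""
    upscaled = []
    for row in image:
        # Repeat each pixel horizontally...
        stretched = [pixel for pixel in row for _ in range(factor)]
        # ...then repeat the whole stretched row vertically.
        for _ in range(factor):
            upscaled.append(list(stretched))
    return upscaled


# A made-up 2x2 "image" of brightness values (0 = black, 255 = white).
source = [[10, 200],
          [60, 120]]

for row in nearest_neighbour_upscale(source, 2):
    print(row)
# [10, 10, 200, 200]
# [10, 10, 200, 200]
# [60, 60, 120, 120]
# [60, 60, 120, 120]
```

Each source pixel simply becomes a 2x2 block, which is why the result looks chunky rather than detailed.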

Another approach is creating interstitial pixels, smoothing out transitions between pixels in low-res sources. A bright pixel next to a dark pixel in the source video would therefore be bordered by mid-level brightness pixels in the final upscaled image. This results in a soft image.
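The in-between pixels described here can be sketched with plain linear interpolation along a single row of pixels. This is a simplified, one-dimensional illustration under our own assumptions (a non-empty row and a whole-number scale factor). Real scalers work in two dimensions and often use more elaborate filters.

```python
def interpolate_row(row, factor):
    """Linearly interpolate a row of pixels, adding factor - 1 new values between neighbours."""
    result = []
    for left, right in zip(row, row[1:]):
        for step in range(factor):
            t = step / factor  # 0.0 at the left pixel, approaching 1.0 near the right one
            result.append(left * (1 - t) + right * t)
    result.append(float(row[-1]))  # keep the final source pixel
    return result


# A dark pixel (0) next to a bright pixel (200), stretched four times.
print(interpolate_row([0, 200], 4))
# [0.0, 50.0, 100.0, 150.0, 200.0]
```

The hard jump from dark to bright has become a gentle ramp, which is exactly the softness you see in conventionally upscaled video.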

AI upscaling tries to work out what additional, ‘spare’ TV pixels should display using machine learning. Sony offers the best single-sentence summary, saying that “Patterns in images are compared with patterns stored in a unique database to find the best hue, saturation and brightness for each pixel.”

The most intuitive explanation of AI upscaling is that these TV processors can recognise objects such as grass, fur or eyelashes and fill in the detail missing in the source image. But it would be more accurate to say upscaling is powered by algorithms that can recognise contrast patterns common in real-world scenes.

An upscaling engine takes cues from the source material to estimate what the footage would look like if it were shot in native 4K or 8K.

For example, grass seen in a 1080p scene will likely lose much of the clarity it would have at 4K. The smooth edges of each blade, and some green tonal contrasts, are lost but the “patterns” Sony and others use to develop their upscaler algorithms try to restore some of this information.

It is particularly useful for areas of high contrast, such as the outline of the iris in someone’s eye. A good AI upscaler will not just sharpen this border, but will thin out the transition between the darker iris and the white of the eye, while avoiding the halo effect that comes with over-sharpening.
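To see where that halo effect comes from, here is a small sketch of classic ‘unsharp mask’ sharpening applied to one row of pixels crossing a dark-to-bright edge. It illustrates conventional sharpening, not any AI upscaler, and the pixel values and `amount` setting are made up for the example.

```python
def box_blur(row):
    """3-tap box blur; edge pixels reuse themselves as the missing neighbour."""
    blurred = []
    for i, value in enumerate(row):
        left = row[i - 1] if i > 0 else value
        right = row[i + 1] if i < len(row) - 1 else value
        blurred.append((left + value + right) / 3)
    return blurred


def unsharp_mask(row, amount):
    """Sharpen by adding back the difference between each pixel and its blurred version."""
    sharpened = []
    for value, soft in zip(row, box_blur(row)):
        boosted = value + amount * (value - soft)
        sharpened.append(max(0.0, min(255.0, boosted)))  # clamp to the 0-255 range
    return sharpened


# Three dark pixels (50) followed by three bright pixels (200).
edge = [50, 50, 50, 200, 200, 200]
print(unsharp_mask(edge, 1.0))
# [50.0, 50.0, 0.0, 250.0, 200.0, 200.0]
```

The pixel on the dark side of the edge drops to 0 and the one on the bright side jumps to 250. Those overshoots are the dark and bright fringes you see as halos when sharpening is turned up too far.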

Balance is key, as in all kinds of picture processing. Engineers at Samsung, Sony and their rivals will have tested more aggressive AI upscaler settings than we have today, before deciding on the final profiles in current TVs as the best balance of sharpness and detail versus a natural-looking image.

The best upscalers also look at the state of pixels across frames, to avoid upscaler-generated detail fizzing in and out of existence in a moving image.

At its core, ‘AI upscaling’ is simply a better way to sell upscaling that has improved in a fairly predictable fashion, given that several of the core techniques are also used in smartphone photo processing. That does not make it unimportant, though.

TVs have processors designed to make these calculations on the fly, which is why older TVs can’t get over-the-air firmware updates to add ‘next-gen’ upscaling.

The real-time aspect is also a limiting factor. TV processors are designed for a narrow set of jobs, and they are not all that powerful compared to, say, a high-end smartphone or a PC graphics card. Upscaling has to happen near-instantaneously, too, and every millisecond it takes adds to input lag.

What we said earlier about AI upscaling can make it sound like a kind of magic, but the limitations of time and processor power mean it is relatively cursory in reality. Still, it’s an effective technique that – when done right – only improves image quality and helps low-res sources look their best on high-res displays.

What about Nvidia DLSS?

Nvidia is the other major force in AI upscaling. It offers excellent 4K upscaling in the Nvidia Shield TV, which is a good option if you are not quite ready to buy a new TV. The Shield TV and Shield TV Pro are well equipped for upscaling, because they have 256-core graphics processors far more powerful than a TV chipset, allowing them to AI upscale 720p and 1080p content to 4K at 30fps.

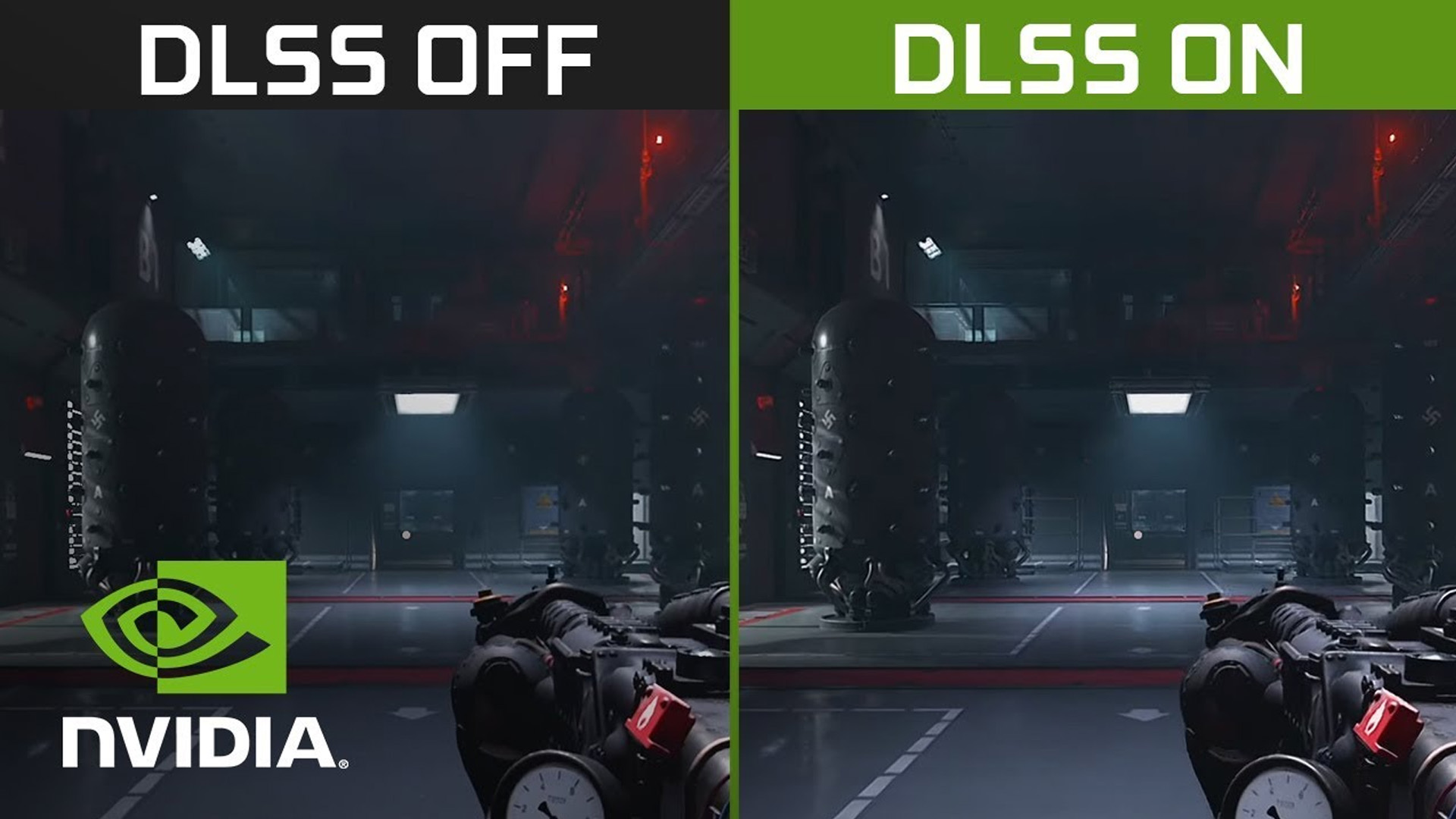

The company’s revolutionary DLSS gaming feature is also arguably a form of AI upscaling. DLSS stands for deep learning super sampling. It is used in Nvidia RTX 20 and RTX 30 series graphics cards, and lets a gaming PC run games at a lower resolution, while DLSS makes up the difference.

You can render a game at 1080p and see a final image that, to most eyes, will be near-indistinguishable from 4K. That means higher frame rates, and greater scope to turn on expensive graphical effects like ray tracing even if you don’t have one of the most powerful graphics cards.

Digital Foundry explored the differences between two recent versions of DLSS, 1.9 and 2.0, on YouTube. Give it a watch. It also serves as a good visual example of the sort of improvements different generations of AI upscaling can offer, even if AI upscaling in a game is not quite the same as AI upscaling of movies and other video content.

Want to do your own AI upscaling? Here’s how

Software packages for Mac and PC also offer standalone AI upscaling. These are not normally intended for real-time upscaling, but are great if you want to clean up some of your own videos.

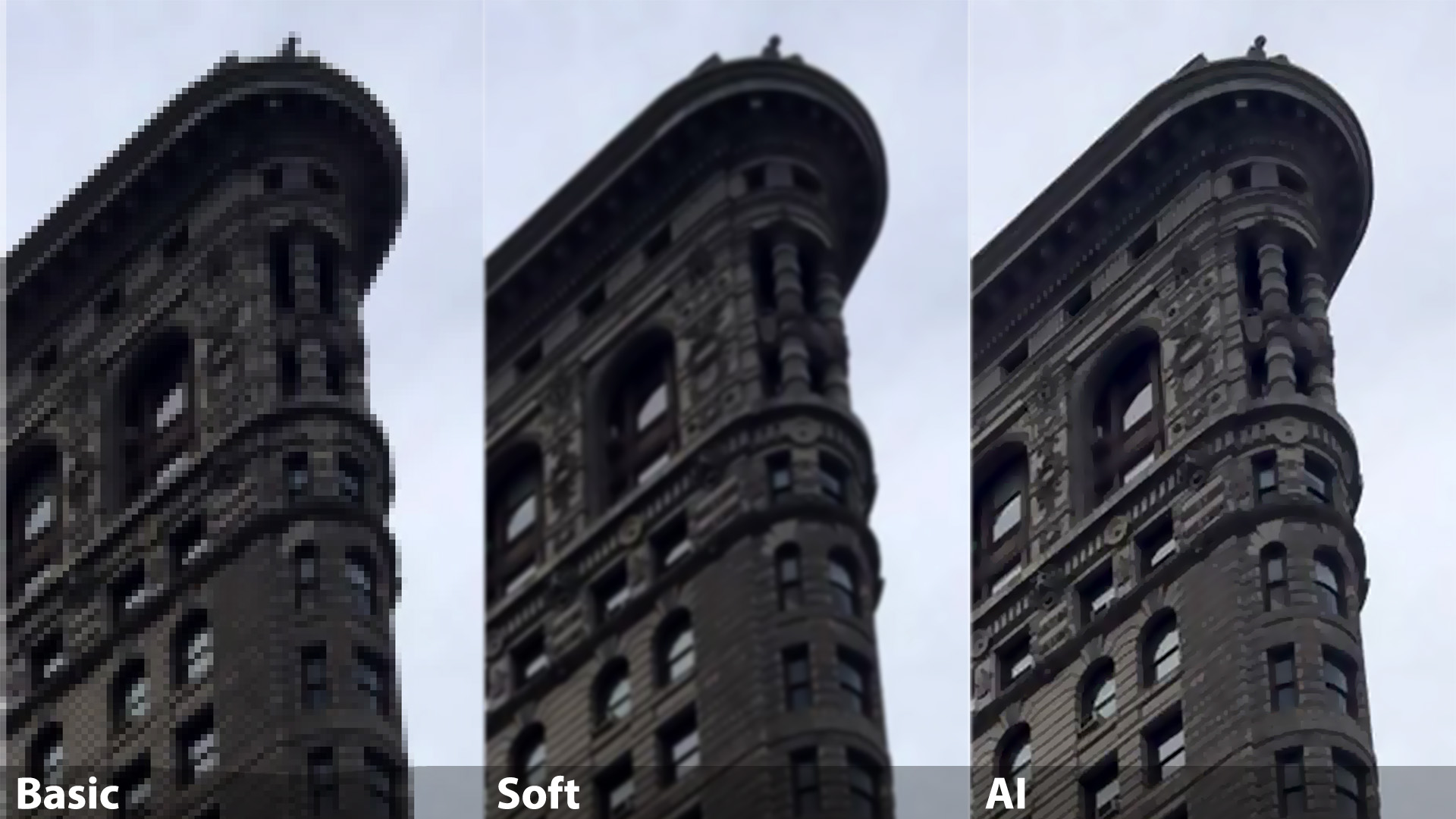

Topaz Labs Video Enhance AI is one of the best around. You drag and drop your files onto its interface, choose an upscaling profile, and let it do its thing. Different profiles use different AI styles, resulting in a different image character and upscaling intensity.

Topaz says upscaling from HD to 8K takes around two-to-three seconds a frame with an Nvidia GTX 1080 graphics card. Even with a powerful PC we’re not close to real-time processing. But this lets the software use more complicated AI processing, to push resolution-limited video that bit further.
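To get a feel for what two-to-three seconds a frame means in practice, here is a back-of-the-envelope calculation. The one-minute clip length and the 30fps frame rate are our own assumptions for the example. Only the per-frame timings come from Topaz’s figure above.

```python
def offline_upscale_seconds(clip_seconds, fps, seconds_per_frame):
    """Total processing time for a clip when every frame takes a fixed time to upscale."""
    frames = clip_seconds * fps
    return frames * seconds_per_frame


CLIP_SECONDS = 60  # assumed: a one-minute home video
FPS = 30           # assumed frame rate

fastest = offline_upscale_seconds(CLIP_SECONDS, FPS, 2)
slowest = offline_upscale_seconds(CLIP_SECONDS, FPS, 3)
print(f"Processing time: {fastest / 60:.0f} to {slowest / 60:.0f} minutes")

# Real-time playback allows 1/FPS seconds per frame, so the slowdown is seconds_per_frame * FPS.
print(f"Slower than real time by a factor of {2 * FPS} to {3 * FPS}")
```

Under those assumptions, a one-minute clip takes 60 to 90 minutes to process, which is 60 to 90 times slower than a TV would need to manage.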


from TechRadar - All the latest technology news https://bit.ly/3ojMiZE
